In this article we explore the main differences between a 3-node Proxmox VE hyperconverged cluster based on Ceph and a 2-node Proxmox VE cluster based on ZFS replication.

Ceph 3 Node Hyperconverged Cluster vs ZFS 2 Node Cluster

1. The concepts of 2 and 3 node clusters

A 3-node cluster is a hyperconverged system, while the 2-node cluster uses replicas to implement high availability.

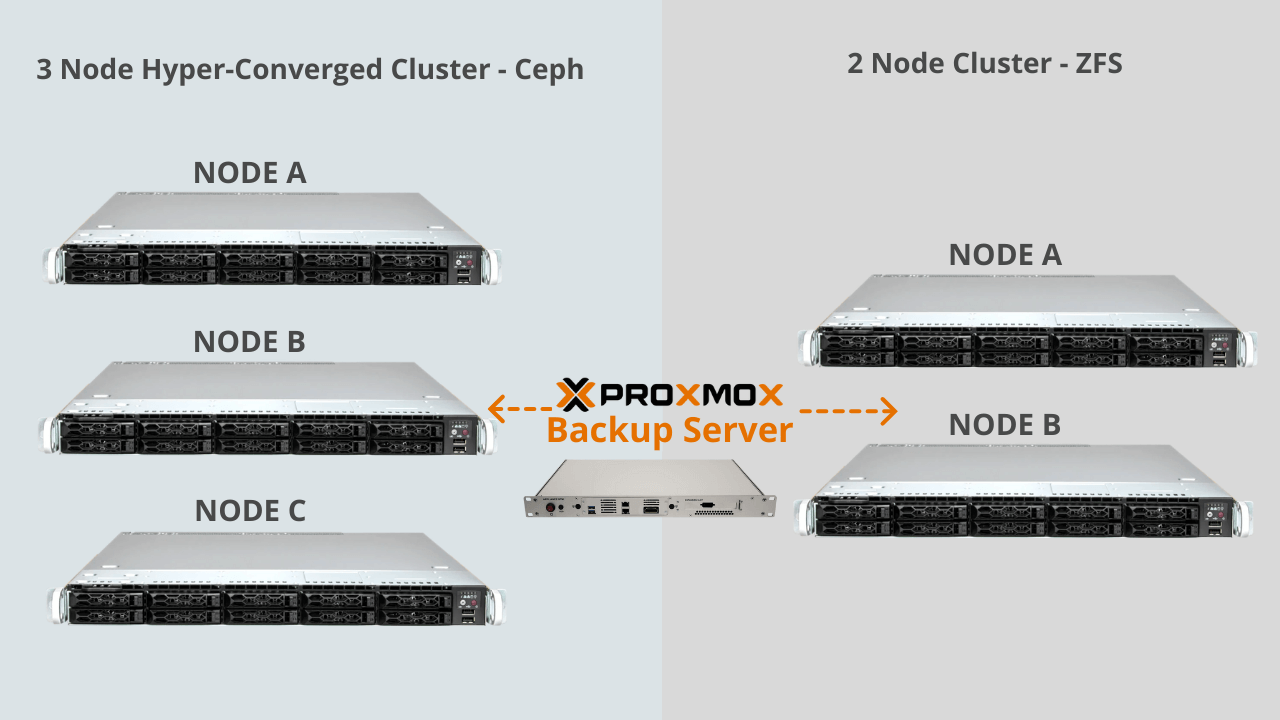

In practice, the 2-node cluster is still made up of 3 nodes: the third one is the Proxmox Backup Server (PBS). PBS is not the topic of this article, so it is ignored from here on.

Let’s look at the overall difference between Ceph and the replicas managed by ZFS.

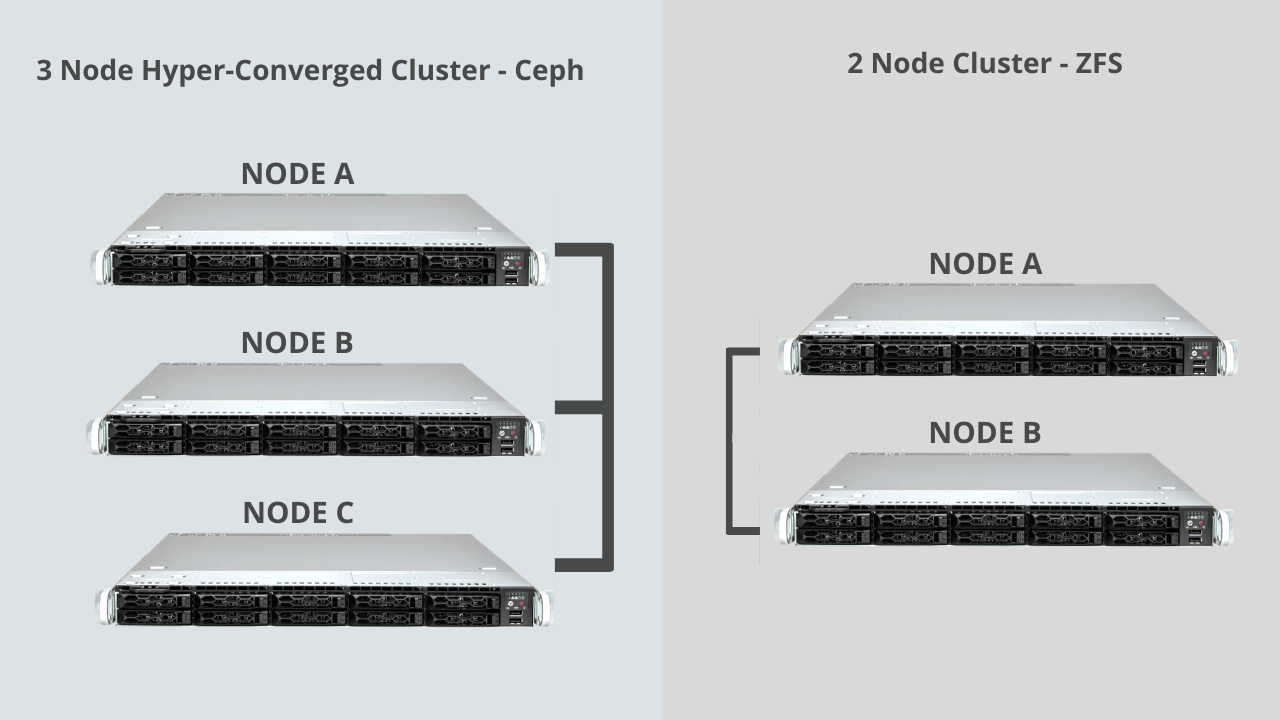

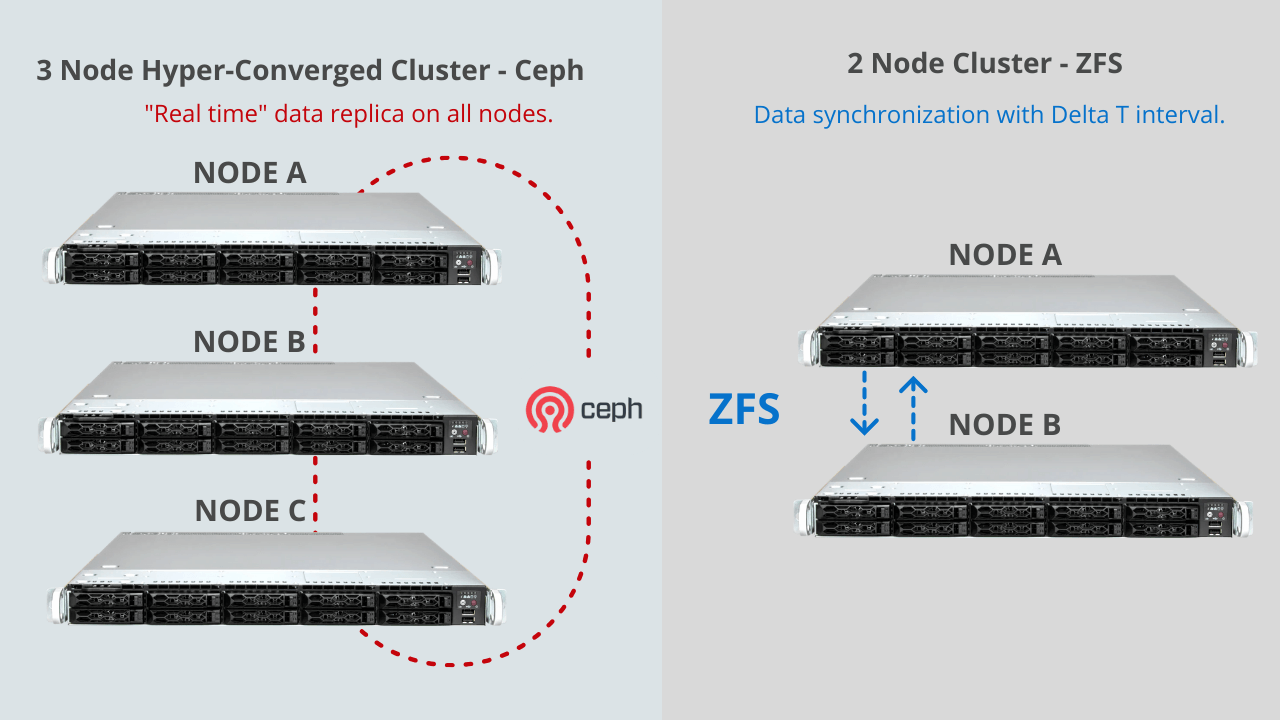

Let’s start with a simple sketch to clarify the concepts. We draw a 3-node cluster, with the nodes named A, B and C.

Then we draw a 2-node cluster, with nodes A and B connected through a switch. The two resulting systems are shown in the diagrams.

2. How 2 and 3 node clusters are managed

In a 3-node Proxmox VE hyperconverged cluster, storage is managed by Ceph, while the 2-node cluster uses ZFS.

In the 3-node Proxmox VE hyperconverged cluster, the data of a virtual machine running on node A is replicated in real time to node B and node C.

In the 2-node cluster, a virtual machine running on node A is replicated to node B (and vice versa) by a replication task that runs at an interval we call delta T, chosen according to our needs.
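The delta T mechanism can be pictured with a toy Python model. Writes from the virtual machine land on node A immediately, and each run of the replication task copies to node B only the blocks changed since the previous run. Proxmox VE storage replication actually works with ZFS snapshots sent incrementally. The `ReplicatedVolume` class and its block-level bookkeeping are hypothetical and only illustrate the idea.

```python
# Toy model of task-based (asynchronous) replication between node A and node B.
# Hypothetical: a volume is a dict of block_id -> bytes. This is NOT how ZFS
# stores data; it only illustrates "ship what changed since the last run".


class ReplicatedVolume:
    def __init__(self) -> None:
        self.source: dict[int, bytes] = {}   # live data on node A
        self.replica: dict[int, bytes] = {}  # copy on node B
        self.dirty: set[int] = set()         # blocks changed since last run

    def write(self, block: int, data: bytes) -> None:
        """A VM write on node A: applied locally, node B is not touched."""
        self.source[block] = data
        self.dirty.add(block)

    def replicate(self) -> int:
        """One run of the replication task: copy dirty blocks, return the count."""
        for block in self.dirty:
            self.replica[block] = self.source[block]
        sent = len(self.dirty)
        self.dirty.clear()
        return sent


vol = ReplicatedVolume()
vol.write(0, b"boot")
vol.write(1, b"data-v1")
print(vol.replicate())  # 2: the first run copies everything written so far
vol.write(1, b"data-v2")
print(vol.replica[1])   # b'data-v1': node B lags until the next run
print(vol.replicate())  # 1: only block 1 changed in this delta T
```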

Below is a diagram to help understand the differences.

We call the 3-node Proxmox VE cluster managed with Ceph “hyperconverged” because each node provides both compute and storage, and the data is replicated in real time across all nodes.

As a result, every Proxmox node sees a single, shared storage.

On the 2-node ZFS cluster we cannot speak of hyperconvergence, because each node keeps its own copy of the data and the copies are aligned by an asynchronous replication task.
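The practical difference between real-time (synchronous) and task-based (asynchronous) replication is when a write is acknowledged. The sketch below is a deliberately simplified model. It assumes three nodes in the synchronous case, which is roughly how a replicated Ceph pool with three copies behaves, and two nodes in the asynchronous case. The functions `write_sync`, `write_async` and `flush` are hypothetical names, and no real Ceph or ZFS API is involved.

```python
# Toy comparison of synchronous vs asynchronous replication.
# Assumption: nodes are plain lists of stored items; no real networking.


def write_sync(nodes: list[list[str]], item: str) -> None:
    """Store the item on every node; only then is the write acknowledged."""
    for node in nodes:
        node.append(item)
    # the acknowledgment to the VM would happen here, after all copies exist


def write_async(primary: list[str], pending: list[str], item: str) -> None:
    """Store locally and acknowledge; the copy waits for the next task run."""
    primary.append(item)
    pending.append(item)


def flush(pending: list[str], replica: list[str]) -> None:
    """The periodic replication task: ship everything pending to the replica."""
    replica.extend(pending)
    pending.clear()


# Synchronous: node A fails right after the ack, B and C still have the write.
a, b, c = [], [], []
write_sync([a, b, c], "invoice-42")
print(b, c)  # ['invoice-42'] ['invoice-42']

# Asynchronous: node A fails before the next replication task runs.
a2, b2, queue = [], [], []
write_async(a2, queue, "invoice-42")
print(b2)    # [] : this write is lost if node A dies now
```

In the synchronous case a crash of node A right after the acknowledgment loses nothing. In the asynchronous case the write is safe only after `flush` has run, which corresponds to the next replication task.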

3. Live migration

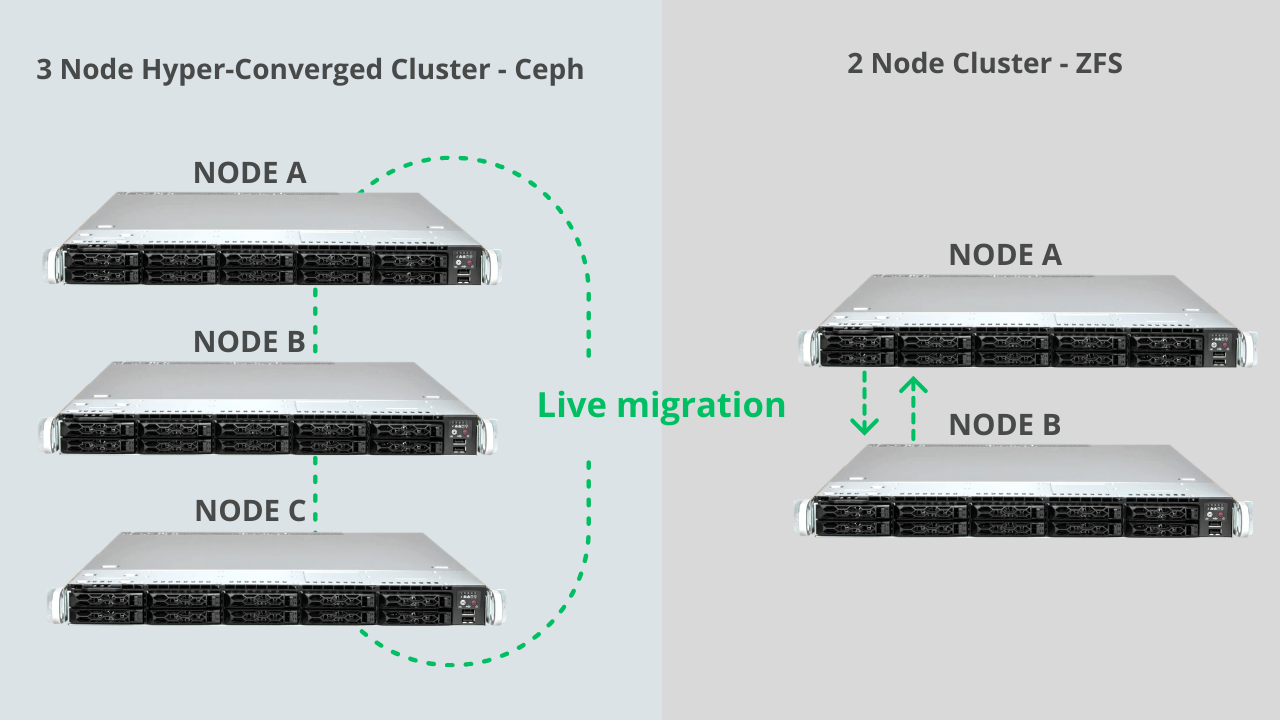

In both cases we can use live migration, which moves a running virtual machine from one node to another without having to worry about where its data actually is.

In a 2-node ZFS cluster, you can live-migrate a virtual machine from node A to node B, because Proxmox VE supports live migration with local storage.

If a replication task from node A to node B is already configured, Proxmox VE transfers at migration time only the data that has changed since the last replication run.

Live migration also works on hyperconverged clusters, and it is faster there. Because the disks are already synchronized in real time on shared storage, only the memory state of the virtual machine has to be moved, with no incremental disk synchronization as in the 2-node cluster.
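One rough way to see why the storage layout affects migration time is to estimate how much data crosses the network. The function below is a back-of-the-envelope sketch with these assumptions:

* sizes are in GiB;
* memory is copied once, although a real live migration also re-sends pages the guest modifies during the copy;
* the `zfs-local` case is a hypothetical VM with no replication job, where the whole disk has to be copied.

The example VM figures are made up.

```python
# Rough estimate of the data a live migration moves between nodes (GiB).
# Assumptions: RAM copied once, no compression, storage types as described
# in this section; "zfs-local" means local ZFS without a replication job.


def migration_transfer_gib(ram_gib: float, disk_gib: float,
                           changed_since_last_sync_gib: float,
                           storage: str) -> float:
    if storage == "ceph":            # shared storage: disks are already everywhere
        return ram_gib
    if storage == "zfs-replicated":  # only the delta since the last replication run
        return ram_gib + changed_since_last_sync_gib
    if storage == "zfs-local":       # no replication job: the whole disk must move
        return ram_gib + disk_gib
    raise ValueError(f"unknown storage type: {storage}")


# Example VM: 16 GiB RAM, 200 GiB disk, 3 GiB written since the last sync.
for kind in ("ceph", "zfs-replicated", "zfs-local"):
    print(kind, migration_transfer_gib(16, 200, 3, kind))
# ceph 16
# zfs-replicated 19
# zfs-local 216
```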

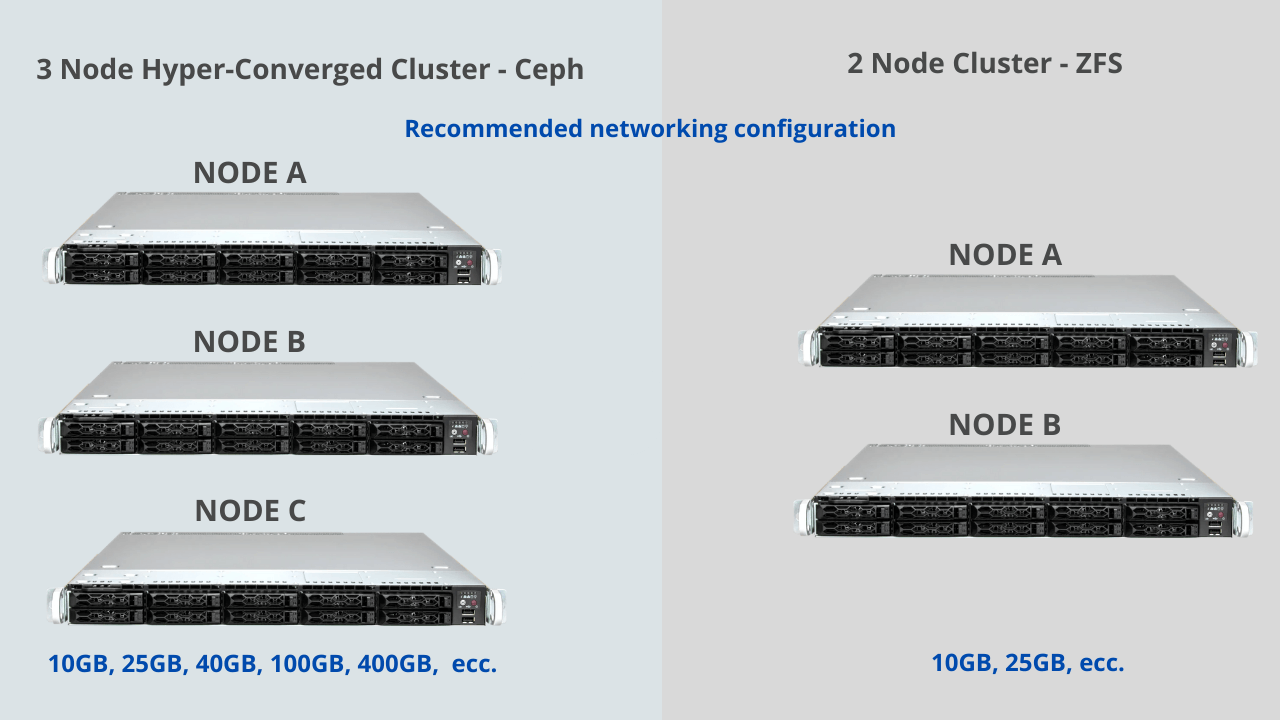

4. Networking tips

In a hyperconverged system, the network is considerably more demanding in terms of performance.

Ceph needs a fast network: at least 10 Gbit/s is recommended, and 25, 40 or 100 Gbit/s is better.

For a 2-node cluster, a 10 Gbit/s or 25 Gbit/s link is enough for excellent performance.
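The networking recommendations can be checked with simple arithmetic. This sketch makes the following assumptions:

* Ceph uses a replicated pool with `size=3`, where the primary OSD forwards every client write to two other OSDs over the cluster network.
* ZFS replication traffic is averaged over the job interval.
* Units are decimal, so 1 Gbit/s = 125 MB/s.

The example workload figures are made up.

```python
# Back-of-the-envelope network load for the two designs.
# Assumptions: replicated Ceph pool with size=3 (the primary OSD forwards each
# client write to size - 1 other OSDs); ZFS job traffic averaged over its
# interval; decimal units, so 1 Gbit/s = 125 MB/s.


def gbit_to_mb_per_s(gbit: float) -> float:
    """Convert a link speed in Gbit/s to MB/s."""
    return gbit * 1000 / 8


def ceph_replication_mb_per_s(client_write_mb_s: float, size: int = 3) -> float:
    """Replica traffic generated on the Ceph cluster network."""
    return client_write_mb_s * (size - 1)


def zfs_average_mb_per_s(changed_mb: float, interval_s: float) -> float:
    """Average rate of a replication job shipping changed_mb every interval_s."""
    return changed_mb / interval_s


link = gbit_to_mb_per_s(10)              # 1250.0 MB/s for a 10 Gbit/s link
ceph = ceph_replication_mb_per_s(500)    # VMs writing 500 MB/s in total
print(f"Ceph replica traffic: {ceph / link:.0%} of a 10 Gbit/s link")
zfs = zfs_average_mb_per_s(30_000, 15 * 60)  # 30 GB changed per 15-minute delta T
print(f"ZFS replication average: {zfs:.1f} MB/s")
```

The Ceph figure ignores the recovery and rebalancing traffic that follows a disk or node failure. That traffic also runs over the cluster network, which is one more reason to size it generously. The ZFS average also hides the fact that each job transfers its data in a burst.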

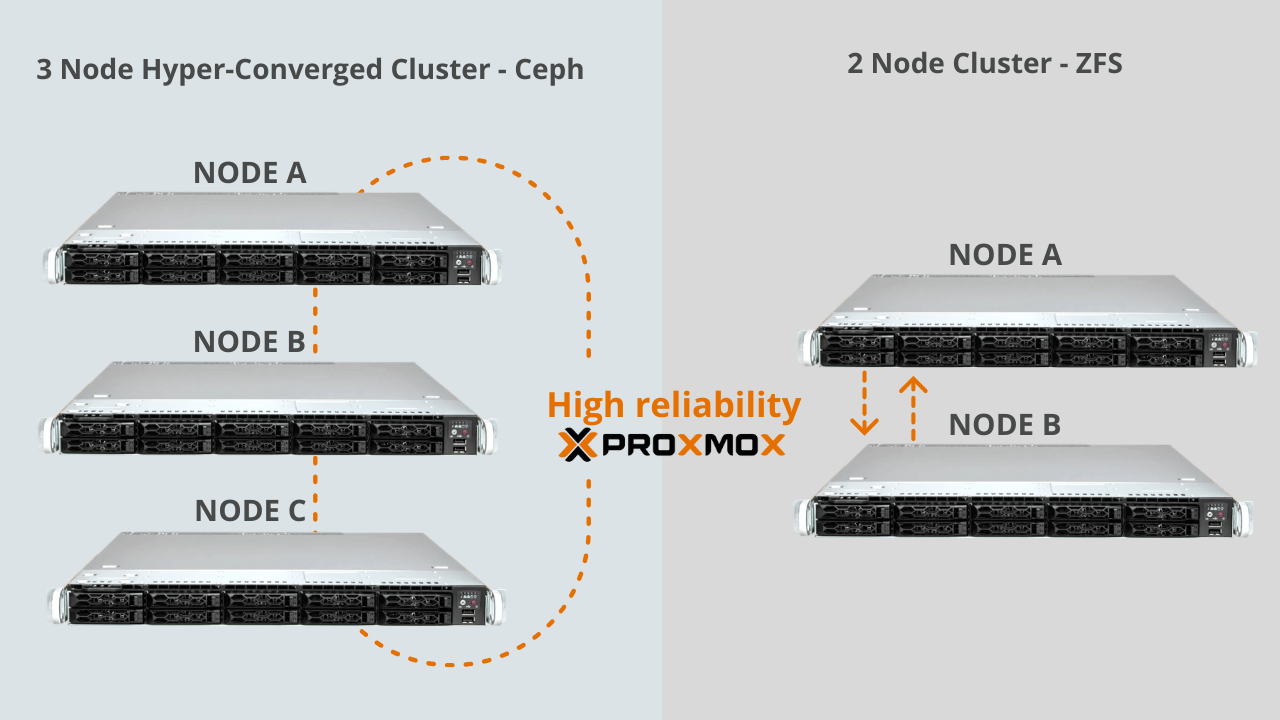

5. High availability

A hyperconverged cluster using Ceph requires a minimum of 3 nodes, which allows it to keep running when one node fails.
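The reason three nodes matter for Ceph comes from the replica rule of a replicated pool: I/O continues as long as at least `min_size` copies are available. The sketch assumes one replica per node (host failure domain) with `size=3` and `min_size=2`, which are common defaults. Check the settings of your own pools. Ceph monitor quorum is not modeled.

```python
# Does a replicated Ceph pool keep serving I/O after losing nodes?
# Assumptions: 3 nodes, one replica per node (host failure domain),
# size=3 and min_size=2. Monitor quorum is not modeled here.


def pool_serves_io(nodes_up: int, size: int = 3, min_size: int = 2) -> bool:
    """I/O continues while at least min_size replicas are reachable."""
    replicas_available = min(nodes_up, size)
    return replicas_available >= min_size


for up in (3, 2, 1):
    state = "I/O continues" if pool_serves_io(up) else "I/O blocked"
    print(f"{up} node(s) up -> {state}")
```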

In a cluster managed with ZFS, the minimum number of nodes is 2, but quorum requires a third vote. This is why the cluster must also include a PBS (for example a PBS host running a corosync QDevice) or a third node.
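The quorum rule behind the third vote is a simple majority: a group of nodes keeps quorum only if it holds more than half of all votes. The sketch assumes one vote per member and plain majority voting, ignoring special corosync options such as `two_node`. The function names are illustrative.

```python
# Majority quorum with one vote per member (node or external QDevice).
# Assumption: plain majority rule, no special corosync votequorum options.


def quorum_needed(total_votes: int) -> int:
    """Smallest number of votes that is a strict majority."""
    return total_votes // 2 + 1


def has_quorum(votes_alive: int, total_votes: int) -> bool:
    return votes_alive >= quorum_needed(total_votes)


# Two nodes alone: losing one node loses quorum.
print(has_quorum(1, 2))  # False
# Two nodes plus a third vote: the cluster survives one failure.
print(has_quorum(2, 3))  # True
```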

Proxmox VE high availability (HA) is available in both cases. It can be configured from the Proxmox VE web interface for both the 3-node cluster and the 2-node cluster.

High availability is the mechanism by which Proxmox VE restarts virtual machines on a surviving node when a node fails.
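Here is a simplified picture of what happens on a node fault. The HA-managed virtual machines of the failed node are restarted, not live-migrated, on the surviving nodes. In this sketch the target is simply the surviving node with the fewest VMs. The `recover` function and its placement dictionary are hypothetical. The real Proxmox VE HA manager also requires quorum, fences the failed node first, and honors HA groups and priorities.

```python
# Simplified HA recovery after a node fault.
# Hypothetical data model: node name -> list of HA-managed VM IDs.
# Fencing, quorum checks, HA groups and priorities are NOT modeled.


def recover(placement: dict[str, list[int]], failed: str) -> dict[str, list[int]]:
    survivors = {node: list(vms) for node, vms in placement.items() if node != failed}
    if not survivors:
        raise RuntimeError("no surviving node to recover VMs on")
    for vmid in placement.get(failed, []):
        # pick the surviving node that currently runs the fewest VMs
        target = min(survivors, key=lambda n: len(survivors[n]))
        survivors[target].append(vmid)  # the VM is restarted here
    return survivors


print(recover({"A": [100, 101], "B": [200], "C": [300, 301]}, failed="A"))
# {'B': [200, 100, 101], 'C': [300, 301]}
```

In the 2-node ZFS case, a VM restarted this way boots from the last replicated copy of its disks. This is where the delta T data loss discussed below comes from.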

In a 2-node ZFS cluster, we must take into account the replication tasks from one node to the other. As mentioned before, the copy on the other node lacks whatever was written since the last synchronization.

In the worst case, if a node fails, the data written during one delta T interval may be lost. Therefore, we must also consider the RPO (Recovery Point Objective) and RTO (Recovery Time Objective) that the company can tolerate.
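The worst-case loss can be estimated with simple arithmetic. The sketch makes these assumptions:

* the replication task starts every `interval_min` minutes;
* each run takes `run_min` minutes;
* the replica reflects the snapshot taken at the start of the last completed run.

Under these assumptions, the worst moment for a failure is just before a run finishes, so the worst-case RPO is about delta T plus the duration of one run. The 5 MB/s write rate in the example is a made-up figure.

```python
# Worst-case data loss (RPO) for a task-based replication job.
# Assumptions: a run starts every interval_min minutes, each run takes run_min
# minutes, and the replica holds the snapshot taken when the last *completed*
# run started.


def worst_case_rpo_min(interval_min: float, run_min: float) -> float:
    if run_min >= interval_min:
        raise ValueError("this simple model assumes each run ends before the next starts")
    # Worst moment: just before a run completes. The newest complete copy is
    # then the previous run's snapshot, taken interval_min + run_min ago.
    return interval_min + run_min


def data_at_risk_gb(write_rate_mb_s: float, rpo_min: float) -> float:
    """Data written during the RPO window, in decimal GB."""
    return write_rate_mb_s * rpo_min * 60 / 1000


rpo = worst_case_rpo_min(interval_min=15, run_min=2)
print(rpo, data_at_risk_gb(5, rpo))  # 17 5.1
```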

In the 3-node Proxmox VE hyperconverged cluster this concern largely disappears, because data is synchronized in real time on all nodes.