In this article we will cover the sizing of a 2 node Proxmox VE cluster.

1. Operating principles of a 2 node cluster

The 2 node cluster actually consists of three parts. It’s called a 2 node cluster because it has two processing nodes (pve1 / node 1, pve2 / node 2) that run the containers and virtual machines.

The third node maintains quorum. The minimum number of nodes for a true cluster system is 3.

The third node is not a processing node but a Proxmox Backup Server and is used for backups.

Unlike the 3 node cluster, the 2 node cluster is not hyper-converged, so it doesn’t use the Ceph framework.

Redundancy of the resources between the 2 nodes is managed by a replication system: all resources running on Node 1 are replicated on Node 2 and vice versa.

2. The resources needed for correct sizing

Let’s take a concrete example of configuration. Similar to the sizing of a 3 node Proxmox VE hyper-converged cluster, let’s look at the characteristics required by the VMs and CTs that populate the cluster.

This helps us understand which resources, and how many of them, the cluster as a whole and each individual node will need.

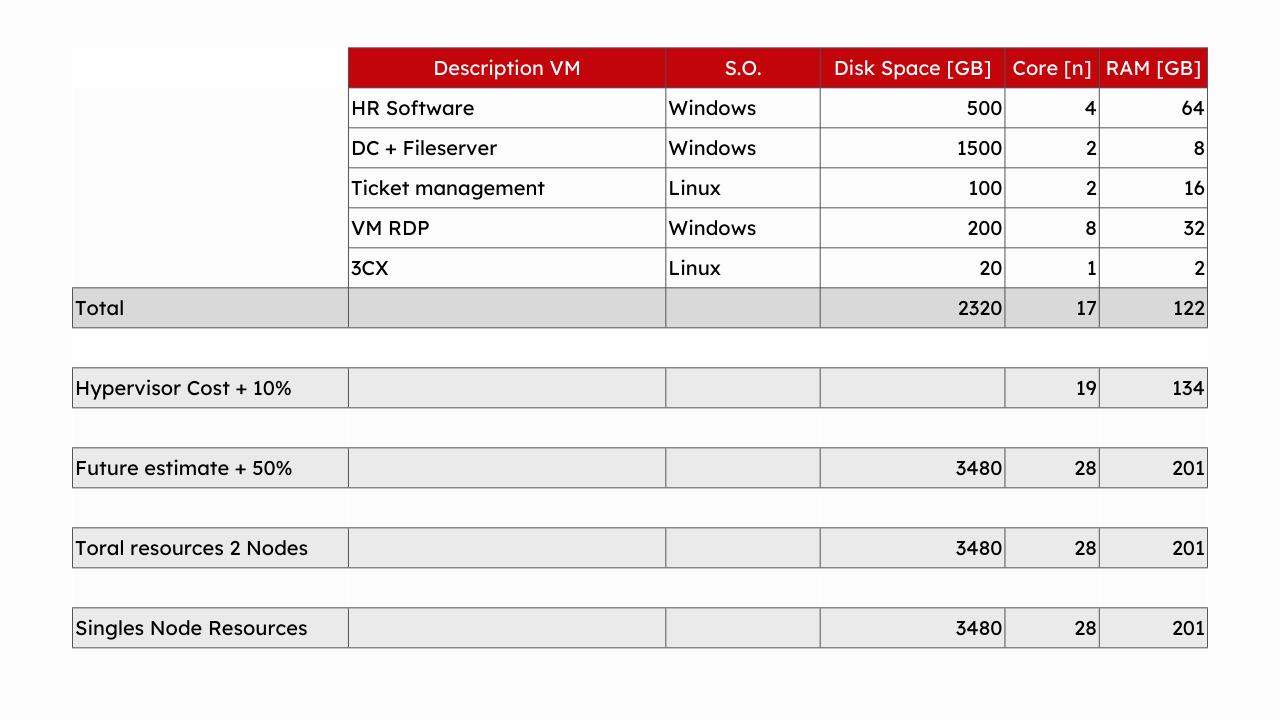

In the following example, we have simulated the classic needs of a small/medium company:

HR software, a domain controller with file server, a ticket management system, an RDP VM (Virtual Machine) on Windows and a 3CX Phone System.

For each of these machines we have listed the required storage space, the number of cores and the amount of RAM in GB.

The totals are simply the sum of each column. On top of these we add between 10% and 15% for the hypervisor.

If you design a cluster today, bear in mind that it will remain in production for several years (from 3 to 7-8 years), depending on company policies.

The cluster load is likely to increase over that time, either because operating systems demand more resources or because company management asks you to install new machines.

It would be wrong to size a cluster thinking only of today’s needs. You have to calculate some leeway for the future.

In this example, we allow for 50% future growth.
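The calculation described so far can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the per-machine figures are hypothetical placeholders (the real ones are in the table above), the 15% hypervisor margin is the upper end of the range mentioned, and applying the hypervisor margin and the growth margin as successive multipliers is our assumption, since they could also be combined differently. The names `Workload` and `required_resources` are invented for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    storage_gb: float
    cores: int
    ram_gb: float


def required_resources(workloads, hypervisor_overhead=0.15, growth=0.50):
    """Sum the workloads, then add the hypervisor and growth margins.

    Assumption: both margins are applied as multipliers, one after the other.
    Returns (storage_gb, cores, ram_gb), each rounded up to a whole number.
    """
    factor = (1 + hypervisor_overhead) * (1 + growth)
    storage = sum(w.storage_gb for w in workloads)
    cores = sum(w.cores for w in workloads)
    ram = sum(w.ram_gb for w in workloads)
    return (
        math.ceil(storage * factor),
        math.ceil(cores * factor),
        math.ceil(ram * factor),
    )


# Hypothetical figures, NOT the ones from the table in the article.
workloads = [
    Workload("hr-software", 200, 2, 8),
    Workload("domain-controller-fileserver", 1000, 4, 16),
    Workload("ticketing", 100, 2, 8),
    Workload("rdp-vm", 300, 4, 32),
    Workload("3cx", 100, 2, 4),
]

required = required_resources(workloads)
print(required)
```

Replacing the placeholder list with the values from your own table gives the storage, cores and RAM to plan for.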

Having obtained the total resources, we apply the following reasoning to the two nodes: for the 2 node cluster to always be functional, a single node must be able to handle the entire load (all VMs and CTs) on its own.

If this condition is not met, the redundancy and fault tolerance that are the basis of the cluster are lost.

For instance, if you need to carry out maintenance on node 1, you can move its resources from node 1 to node 2 while they are running, that is, while users are working.

At the end of maintenance work, you transfer the resources back to node 1 thus balancing the load on the 2 nodes again.
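As a minimal sketch of the rule above, the following check verifies that one node could host the combined load of both nodes. The capacity and load figures are hypothetical, and `can_run_on_single_node` is a helper invented for this example, not a Proxmox VE function.

```python
def can_run_on_single_node(node_capacity, loads_per_node):
    """Return True if one node can host the combined load of all nodes.

    node_capacity: dict of resource name -> capacity of a single node
    loads_per_node: list of dicts, one per node, resource name -> current load
    """
    combined = {}
    for load in loads_per_node:
        for resource, amount in load.items():
            combined[resource] = combined.get(resource, 0) + amount
    # A resource missing from the capacity dict counts as zero capacity.
    return all(
        amount <= node_capacity.get(resource, 0)
        for resource, amount in combined.items()
    )


# Hypothetical figures for illustration only.
capacity = {"storage_gb": 3840, "cores": 32, "ram_gb": 256}
node1_load = {"storage_gb": 1500, "cores": 12, "ram_gb": 90}
node2_load = {"storage_gb": 1400, "cores": 10, "ram_gb": 80}

fits = can_run_on_single_node(capacity, [node1_load, node2_load])
print(fits)
```

If the check fails, moving everything to one node during maintenance or after a failure would overcommit that node.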

In our example, each cluster node will need about 3.5 TB of data storage, 28 cores and 200 GB of RAM.

Once hardware characteristics of the single node have been defined, proceed to request a quote to get a financial quantification of the investment.

Let’s now choose one of the 2 node Proxmox VE clusters and size it. We’ll choose the M1N2 Cluster.

If unsure, leave the default values selected. We will take a look at the quote before sending it to you.

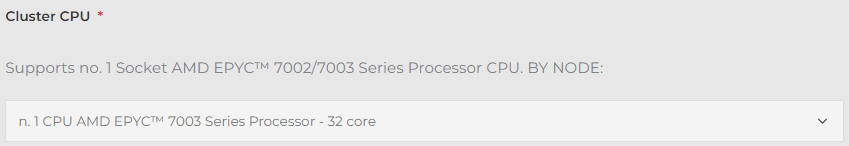

3. The number of cores

As mentioned above, this cluster is made up of two nodes and a Proxmox Backup Server.

Select the number of cores (in the example it came to 28). You can round up or down, depending on what you need. In this example we selected 32 cores.

We could also have selected 24 cores, bearing in mind that we might then run short of resources over time.
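The rounding choice can be illustrated with a short helper that returns the nearest available option below and above the calculated value. The list of core options is a hypothetical example, not the actual list offered in the configurator, and `neighbouring_options` is a name invented for this sketch.

```python
import bisect


def neighbouring_options(required, options):
    """Return (lower, upper): the closest options at or below / at or above `required`.

    Either value is None when no such option exists.
    """
    opts = sorted(options)
    i = bisect.bisect_left(opts, required)
    if i < len(opts) and opts[i] == required:
        return required, required
    lower = opts[i - 1] if i > 0 else None
    upper = opts[i] if i < len(opts) else None
    return lower, upper


# Hypothetical list of selectable core counts.
CORE_OPTIONS = [16, 24, 32, 48, 64]

print(neighbouring_options(28, CORE_OPTIONS))  # (24, 32)
```

Choosing the upper value keeps the growth margin intact, while choosing the lower value uses up part of it.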

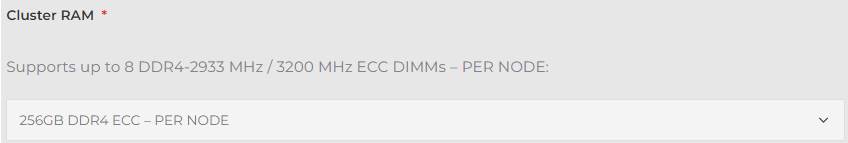

4. Cluster RAM

We round the 200 GB of RAM from the example up to 256 GB.

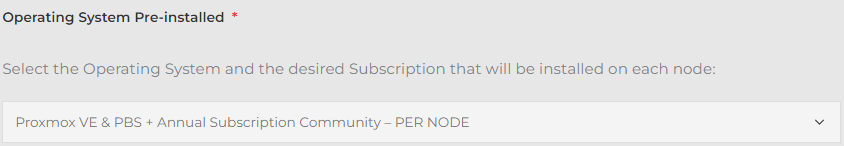

5. Operating system

Select, for example, a Proxmox VE Community Edition subscription.

6. Operating system storage

For the operating system 128GB of space is more than enough.

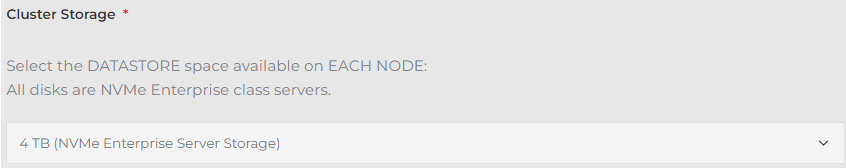

7. Cluster storage

From the example we need about 3.5 TB per node. We select the 4 TB option, which in practice is closer to 3840 GB.

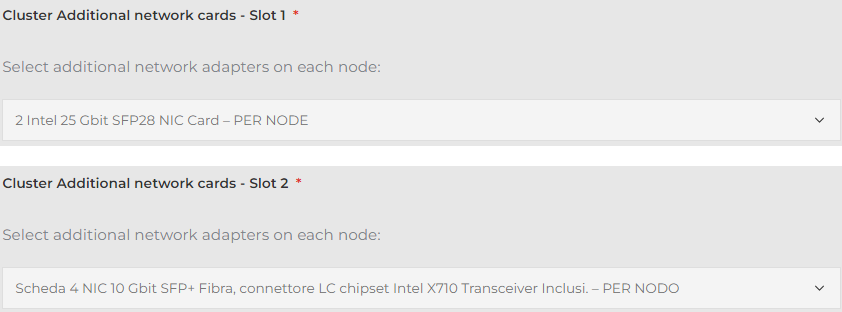

8. Additional network cards

By default we selected two 25 Gb NICs and four 10 Gb fiber NICs, because at least 6 NICs are required for clusters of this range. Every configuration must be redundant, so that a single port failure cannot affect the functioning of the system.

The 25 Gb NICs are typically used for cluster management and backup. At 25 Gb, these links are sized so that system operations never saturate them.

For example, the disks in use will never reach a throughput of 25 Gb and therefore won’t saturate the network.

Of the four 10 Gb fiber NICs, two are used for virtual machine traffic and the other two for replication.

On high-end 2 node clusters, 6 NICs are normally used. On entry-level clusters, 4 NICs can be enough if the functions described above are combined.
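The NIC layout described above can be written down as a small plan and checked for redundancy. This is a sketch only: the role names and the `is_redundant` helper are invented for this example, and "redundant" is taken here to mean at least two ports per role.

```python
# NIC plan for one node, following the 6 NIC layout described above.
nic_plan = {
    "cluster_management_and_backup": {"speed_gbit": 25, "ports": 2},
    "virtual_machines": {"speed_gbit": 10, "ports": 2},
    "replication": {"speed_gbit": 10, "ports": 2},
}


def is_redundant(plan, min_ports=2):
    """True if every role has at least `min_ports` ports (assumed redundancy rule)."""
    return all(role["ports"] >= min_ports for role in plan.values())


total_ports = sum(role["ports"] for role in nic_plan.values())
print(total_ports, is_redundant(nic_plan))
```

An entry-level 4 NIC layout would merge two of these roles onto the same pair of ports.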

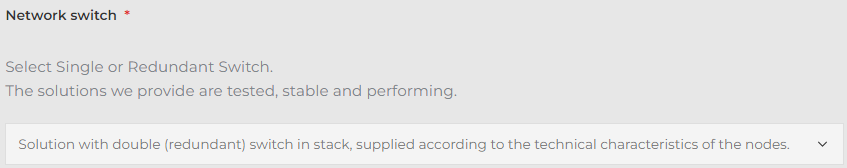

9. Network switch

The network switch is chosen based on the configuration selected above. To have redundancy on the whole network, we recommend the double switch solution.

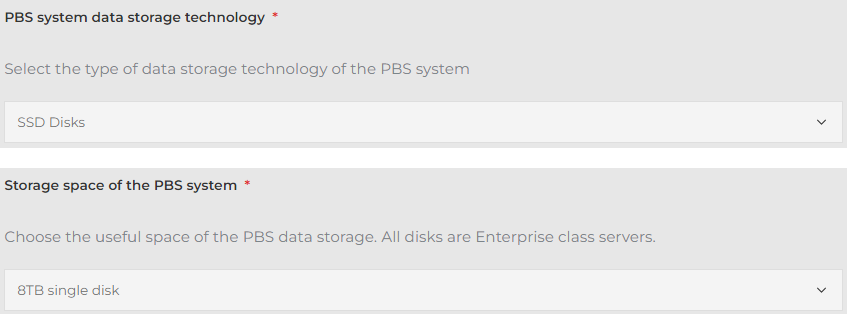

10. PBS storage

Please note that Proxmox Backup Server is a node that is part of the cluster, therefore basically two types of NIC will be used: one will be used for backups and the other for the cluster.

To get a fully redundant configuration you will need a PBS with at least 4 NICs. Unless the amount of data to manage is very small, we always install a PBS with 4 NICs.

The reasoning for calculating the PBS storage space is very simple: multiply the storage present on the Proxmox cluster by 2. For more accurate sizing, the number of backup versions and their retention should also be considered.

If you don’t have special needs, calculating twice the size of the single cluster node is a good compromise.
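As a quick illustration of this rule of thumb, the calculation for the 3840 GB node storage selected earlier looks like this. The function name `pbs_storage_gb` is invented for the example, and retention and versioning are deliberately not modelled.

```python
def pbs_storage_gb(node_storage_gb, multiplier=2):
    """Rule-of-thumb PBS capacity: a multiple of the storage of a single cluster node."""
    return node_storage_gb * multiplier


print(pbs_storage_gb(3840))  # 7680
```

A longer retention period or more backup versions would call for a larger multiplier.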

The choice between NVMe, SSD and SATA disks mainly depends on the speed you want to achieve during the backup and restore phases.

NVMe and SSD disks always allow you to maintain excellent speed in both phases.